Advanced Techniques - Importing XML data via Data transfer

Seeing as XML files can have a variety of structures, you can't get around having to write an individual import script every time you import an XML file. Intrexx provides you with the ability to use a corresponding Groovy script as a data source when using Data transfer.Analyzing the XML structure

For this example, an application is exported with data. Change the file ending lax of the exported file to zip, so that you can then unpack the file. Intrexx saves the data in the application export as an XML. The directory, where you can find the corresponding data file in the unpacked export file is generated by Intrexx as follows:Application-name\Application-GUID\data

In this folder, the XML files, which contain the data, are named according to the corresponding data groups found in the exported application - e.g. XDATAGROUP00D1A218.data. The XML file's structure looks something like this:

<dump>

<schema>

…

</schema>

<data>

<r>

<c n="lid">1</c>

<c n="luserid">1</c>

<c n="luseridinsert">1</c>

<c n="dtinsert"> 2016-11-24T08:59:42.064Z</c>

<c n="dtedit"> 2016-11-24T08:59:42.064Z</c>

<c n="str_title_6a585d30">First entry</c>

</r>

…

</data>

</dump>

Writing the Groovy script

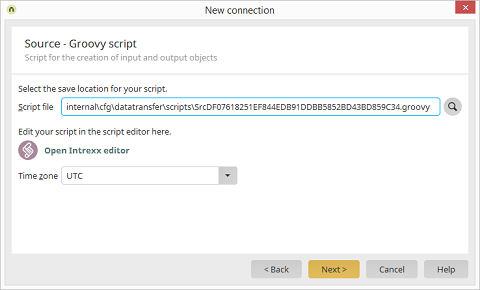

When setting up the Data transfer, the data source must be changed to Groovy script.

Click on Next.

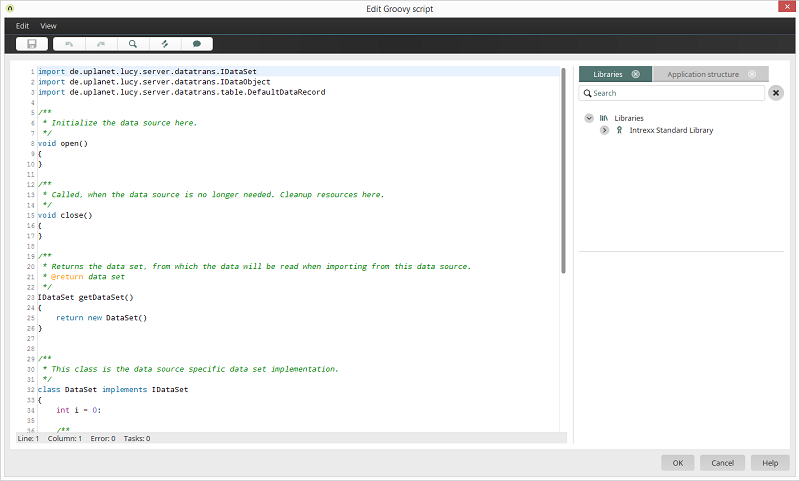

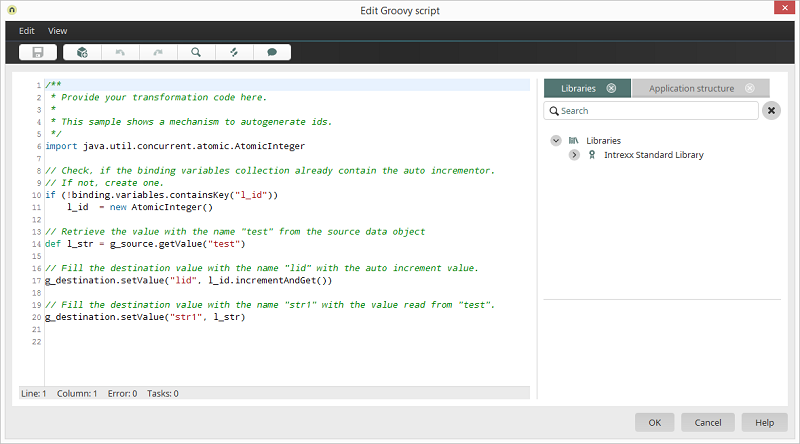

To start with, Intrexx generates a dummy script that's kept general and not specifically intended for XML data. This script just needs to be amended now. Open the Intrexx Editor by clicking on the corresponding link.

First of all, a method parseXML needs to be added to the class DataSet which will initially load the content, independent of the actual type, as text to a cache. When creating the DataSet, it's mandatory that this is also called up in the method getDataSet before the generated object can be returned. Subsequently, the method next will be adjusted so that the values in the cache are converted to the actually used data type. In this example the correct Java data types can be found in the XML file itself, the data type of each data field is specified in the schema section. Here is the completed script for the source:

import de.uplanet.lucy.server.datatrans.IDataSet

import de.uplanet.lucy.server.datatrans.IDataObject

import de.uplanet.lucy.server.datatrans.table.DefaultDataRecord

import java.text.SimpleDateFormat

import java.util.TimeZone

void open(){}

void close(){}

/**

* Returns the data set, from which the data will be read when importing from this data source.

* @return data set

*/

IDataSet getDataSet()

{

def ds = new DataSet()

ds.parseXML()

return ds

}

/**

* This class is the data source specific data set implementation.

*/

class DataSet implements IDataSet

{

def sdf = new SimpleDateFormat("yyyy-MM-dd'T'HH:mm:ss.SSS'Z'")

def fileContents = new File("C:\\XML_SOURCE_DATA.data").getText("UTF-8")

def content = new XmlParser().parseText(fileContents)

def xmlRows = content.data.r

def rows = []

def i = 0

void parseXML(){

for(xmlRow in xmlRows)

{

def row = [:]

def xmlColumns = xmlRow.c

for(xmlColumn in xmlColumns)

{

def strColumnName = xmlColumn."@n"

def strValue = xmlColumn.text()

row.put(strColumnName, strValue)

}

rows.add(row)

}

}

IDataObject next(){

if (i >= rows.size()) return null;

sdf.setTimeZone(TimeZone.getTimeZone("UTC"))

def l_record = new DefaultDataRecord(null, [

"lid":java.lang.Integer,

"luserid":java.lang.Integer,

"luseridinsert":java.lang.Integer,

"dtinsert":java.sql.Timestamp,

"dtedit":java.sql.Timestamp,

"str_title":java.lang.String,

])

def row = rows[i]

l_record.setValue("lid", Integer.parseInt(row.get("lid")))

l_record.setValue("luserid", Integer.parseInt(row.get("luserid")))

l_record.setValue("luseridinsert", Integer.parseInt(row.get("luseridinsert")))

l_record.setValue("dtinsert", new java.sql.Timestamp(sdf.parse(row.get("dtinsert")).getTime()))

l_record.setValue("dtedit", new java.sql.Timestamp(sdf.parse(row.get("dtedit")).getTime()))

l_record.setValue("str_title", row.get("str_title"))

i++;

return l_record

}

void close(){}

}

| Please note with this method that the XML file is initially loaded to the cache in its entirety and then processed afterwards. This can lead to a buffer overflow when using large files, this is why a more specialized method should be used in this case. |

Copy the example script and add it in the Editor. Then click on Next.

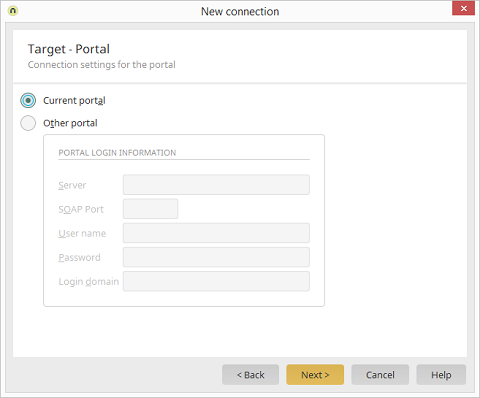

Select the target for the import and then click on Next.

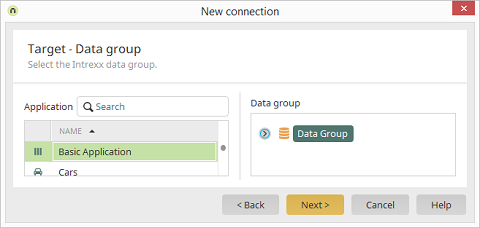

Select the data group that the data should be imported into. Click on Next.

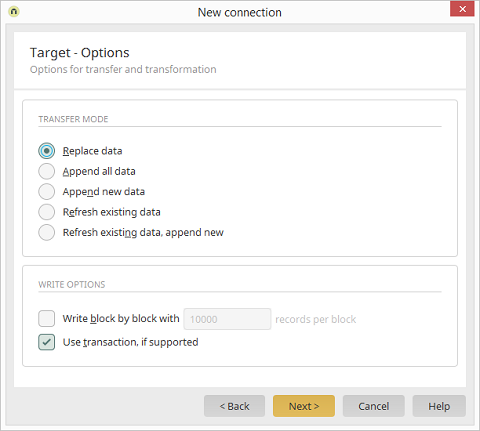

Finally, the data needs to be transformed. To do that, the setting Use transaction, if supported needs to be activated. Click on Next.

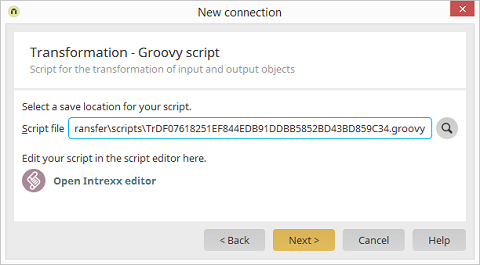

Open the Intrexx Editor.

The wizard for creating the data transfer has already adjusted the field for transformation accordingly so that a Groovy script can now be specified here as well. The transformation assigns data fields from the source to the data fields in the target. If you require your own IDs for the import, then this is point when these should be generated and assigned to the ID field. This isn't necessary in this example, meaning that the source and target fields can simply be assigned accordingly. Here is the Groovy script for the transformation:

g_destination.setValue("lid", g_source.getValue("lid"))

g_destination.setValue("luserid", g_source.getValue("luserid"))

g_destination.setValue("luseridinsert", g_source.getValue("luseridinsert"))

g_destination.setValue("dtinsert", g_source.getValue("dtinsert"))

g_destination.setValue("dtedit", g_source.getValue("dtedit"))

g_destination.setValue("str_title", g_source.getValue("str_title"))

| If data isn't transferred, please check the use of upper and lower case in the Groovy script. Depending on the server type, the use of upper and lower case my need to be considered both in the formulation of the source as well as the transformation. |